Election Day Lessons for the Data Scientist

Election Day Lessons for the Data Scientist

Earlier this month…

Kim Hypothetical, FCAS, is a pricing actuary in the Large Accounts department at Hypothetical Insurance (no relation). It is just before lunch. Kim wonders “Is traditional actuarial work the best choice for me today? Maybe I should be a predictive modeler.” Kim makes a note to investigate the new iCAS designation…

Hypothetical’s Chief Enabler of Opportunities walks over to Kim’s tiny open-plan solution conception pod. A rush project has just come in from their favorite broker at Gal Benmeadow. Lectral College, a large claims administration client of Gal’s and Hypothetical’s, is worried about its aggregation risk. Lectral has locations in every state and a substantial total exposure. The broker is working with Lectral to consider an aggregate stop loss attaching “in the middle of the distribution”. Good news: the account has wonderful data, some of it stretching back to the Declaration of Independence—although it may not all be relevant today. The CEO asks Kim to work some numbers up to review before the end of the day.

Over lunch, Kim ponders the assignment. Straightforward. The claim history will be enough to build a frequency and severity model. The frequency of claims: likely Poisson. Trend and develop historical losses; use Kaplan-Meier to handle limited claims, and fit an unlimited severity distribution. Then frequency-severity convolution applying the prospective limits profile. Probably throw in a Heckman-Meyers convolution just to impress the CEO, Kim thinks. No need to change my dinner reservation, I will be done before six. The hardest part will be translating what the broker meant by “in the middle of the distribution”.

Back at the solution conception pod, Kim opens the data submission and begins investigating the claims. It is true there is an extensive claims history; Kim cannot recall having seen better data. A couple of clicks and Kim has a summary of historical claim frequency by state. It turns out Lectral only has one location per state, and almost of its locations have had a claim at one point or another. There is so many years of data it is hard to know how much of the data to use. Kim settles on using losses since 1972.

Claim severity contains a few surprises. Lectral’s losses are all full-limit losses. There is only a single partial loss in the history, in 2008, and that was a small loss. I can think of it as a stated amount policy, Kim thinks. That will simplify the analysis. The broker has even provided a prospective limit profile, which can be used in place of severity. Dinner’s a lock, Kim thinks.

Kim summarizes the probability of loss \(p_s\) and the stated-amount \(l_s\) for each state \(s\) (Table 1). Aggregate losses \(L=\sum_s l_s B_s\) where \(B_s\) is a Bernoulli random variable with parameter \(p_s\). Kim’s first thought is to simulate the distribution of \(L\), but then Kim has a better thought: fast Fourier transforms.

| State | Loss | Chance | State | Loss | Chance |

|---|---|---|---|---|---|

| Alaska | 3 | 0.231 | Montana | 3 | 0.032 |

| Alabama | 9 | 0.002 | Nebraska | 5 | 0.015 |

| Arizona | 11 | 0.257 | Nevada | 6 | 0.486 |

| Arkansas | 6 | 0.005 | New Hampshire | 4 | 0.609 |

| California | 55 | 0.999 | New Jersey | 14 | 0.961 |

| Colorado | 9 | 0.721 | New Mexico | 5 | 0.813 |

| Connecticut | 7 | 0.95 | New York | 29 | 0.997 |

| Delaware | 3 | 0.9 | North Carolina | 15 | 0.482 |

| Dist. Of Columbia | 3 | 0.999 | North Dakota | 3 | 0.002 |

| Florida | 29 | 0.475 | Ohio | 18 | 0.327 |

| Georgia | 16 | 0.169 | Oklahoma | 7 | 0.001 |

| Hawaii | 4 | 0.99 | Oregon | 7 | 0.914 |

| Idaho | 4 | 0.007 | Pennsylvania | 20 | 0.741 |

| Illinois | 20 | 0.979 | Rhode Island | 4 | 0.914 |

| Indiana | 11 | 0.021 | South Carolina | 9 | 0.103 |

| Iowa | 6 | 0.268 | South Dakota | 3 | 0.051 |

| Kansas | 6 | 0.021 | Tennessee | 11 | 0.018 |

| Kentucky | 8 | 0.003 | Texas | 38 | 0.044 |

| Louisiana | 8 | 0.005 | Utah | 6 | 0.027 |

| Maine | 4 | 0.773 | Vermont | 3 | 0.977 |

| Maryland | 10 | 0.999 | Virginia | 13 | 0.817 |

| Massachusetts | 11 | 0.998 | Washington | 12 | 0.968 |

| Michigan | 16 | 0.759 | West Virginia | 5 | 0.002 |

| Minnesota | 10 | 0.808 | Wisconsin | 10 | 0.774 |

| Mississippi | 6 | 0.016 | Wyoming | 3 | 0.008 |

| Missouri | 10 | 0.029 |

With a few lines of Python, Kim writes a function \(agg\) taking inputs \(p_s\) and \(l_s\) and returning the full distribution of losses.

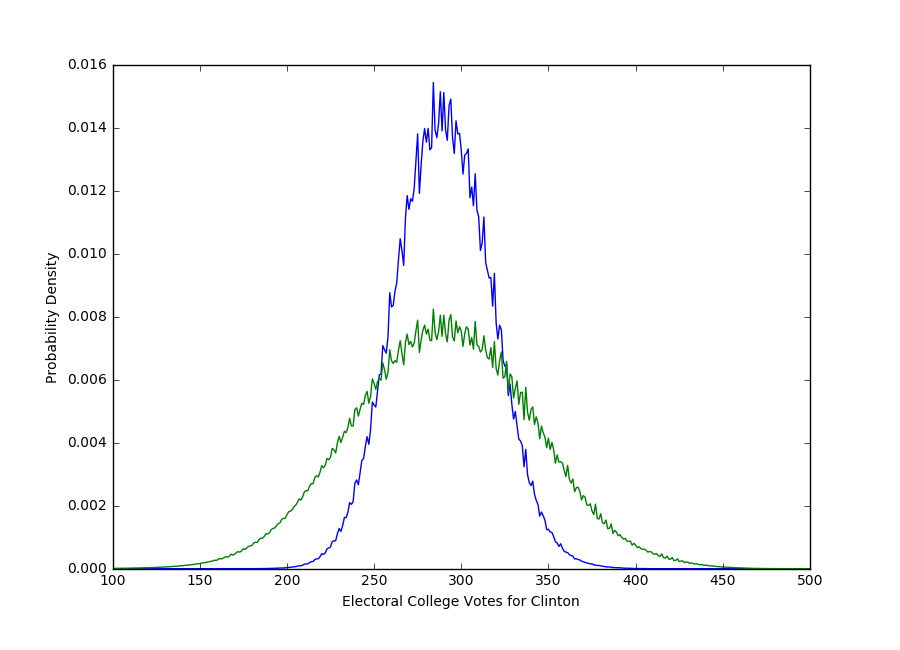

After less than an hour on the project Kim has the full distribution of aggregate losses (Figure 1, Table 2) and is ready to price whatever structure the broker proposes. The mean loss is just above the midpoint. In fact, there is a 77.1 percent chance of a loss greater than 50 percent of the aggregate limit. Next step: review with the CEO.

| \(l\) | \(\mathsf{Pr}(L=l)\) | \(\mathsf{Pr}(L'= l)\) | \(\mathsf{Pr}(L\ge l)\) | \(\mathsf{Pr}(L'\ge l)\) |

|---|---|---|---|---|

| 210 | 0.00011 | 0.00228 | 99.9% | 94.2% |

| 220 | 0.00038 | 0.00306 | 99.7% | 91.5% |

| 230 | 0.00112 | 0.00400 | 98.9% | 87.9% |

| 232 | 0.00119 | 0.00401 | 98.7% | 87.1% |

| 240 | 0.00272 | 0.00506 | 97.2% | 83.5% |

| 250 | 0.00529 | 0.00603 | 93.4% | 78.1% |

| 260 | 0.00877 | 0.00695 | 86.9% | 72.0% |

| 270 | 0.01185 | 0.00745 | 77.1% | 65.1% |

| 280 | 0.01398 | 0.00774 | 64.5% | 57.8% |

| 290 | 0.01391 | 0.00738 | 50.5% | 50.2% |

| 300 | 0.01383 | 0.00769 | 36.4% | 42.6% |

| 310 | 0.01140 | 0.00712 | 23.9% | 35.2% |

Hypothetical Insurance prides itself on its sophisticated pricing methods. It is particularly proud of its profit load algorithm, the PLA. The PLA was developed by a famous actuary many, many years ago. Central to the PLA is the idea of splitting account-level risk into process and parameter risk components and charging separately for each. Hypothetical believes all risk should be compensated and even applies a charge to process risk, unfashionable though that may be. The CEO likes to point out that Hypothetical was spared a New Zealand earthquake loss by the PLA-enforced pricing discipline. Rating agencies are impressed with its risk management. In line with modern financial thinking, Hypothetical also understands systemic parameter risk is more significant and so it is given a much larger weight in the PLA.

The CEO reviews Kim’s exhibits. “Full marks for working efficiently, and nice use of the FFT! I’m glad you saw so quickly you do not need to worry about severity—I forgot to mention that to you—but what about the risk loading?”

Kim is annoyed. How could I have forgotten about the risk loading? Think fast!

“It is predominantly process risk. Clearly there is no claim severity risk: it is a stated amounts policy. But each state uses a Bernoulli variable for claim occurrence, so the process risk of the Bernoulli coin toss swamps out any parameter risk.”

“But what is the parameter risk? How did you come up with these probabilities?” asks the CEO. Kim’s next step: build a model of the state-by-state probabilities \(p_s\) to provide the inputs for the PLA. Maybe I should become a predictive modeler Kim thinks, for the second time that day.

Poring through reams of historical state-level data, which the broker had conveniently scanned into PDF format from the original spreadsheets, Kim creates a one-parameter model for each state and estimates the parameter \(\hat\theta_s\), \(0\le \hat \theta\le 1\). The data shows \(\hat\theta_s\) is related to the experience-based loss probability \(p_s\). When \(\hat \theta<\theta_r\) then \(p\) is very small and there is no claim. When \(\hat \theta>\theta_d\) then \(p\) is close to 1 and there is a claim. In between is a gray area. The data shows \(\hat\theta_s>0.5\) generally corresponds to a claim. The data indicates values \(\theta_r=0.40\) and \(\theta_d=0.60\) and even suggests the form of the \(S\)-functions linking \(\hat\theta\) to the probability of a claim.

Kim’s state-by-state models provide an explicit quantification of parameter risk because they provide estimated residual errors \(\hat\sigma_s\) for each state. Kim is pleased. But the \(\hat\theta_s\) values for most states fall in the critical range \([\theta_r, \theta_d]\), indicating parameter risk is important for the proposed Lectral cover. Kim turns back to the data.

Kim notices there is a postmortem analysis after each loss included in the submission. It contains more accurate measures of the variables Kim used to estimate \(\hat\theta_s\). With enough effort and enough money the new information could have been known prior to the loss and so it seems reasonable to use it in the model. Kim re-calculates each model parameter with the more accurate input variables, getting \(\bar\theta_s\).

Hello, predictive modeling nirvana: by using \(\bar\theta_s\) the model has become perfectly predictive! In every case, for every state and every year, when \(\bar\theta_s>0.5\) there is a claim and otherwise not. Once computed using the best possible information the relationship between \(\bar\theta_s\) and \(p_s\) is a step function: \(p_s = R(\bar\theta_s)=1\) if \(\bar\theta_s >0.5\) and \(p_s=R(\bar\theta_s)=0\) if \(\bar\theta_s<0.5\). Kim is relieved that a value \(\bar\theta=0.5\) has never been observed but is not surprised since all the variables are continuous. (Kim passed measure theory in college.) Time is passing and Kim needs to get back to the CEO. Next step: how to build in parameter risk?

I have an unknown parameter \(\theta_s\) for each state that perfectly predicts loss, Kim thinks. I have a statistical model estimating \(\theta_s\): \(\theta_s=\hat\theta_s+\hat\sigma_s Z_s\), where \(Z_s\) a standard normal. Hence \(\hat\theta_s\) is unbiased. I know there is a claim when, and only when, the true parameter \(\theta_s>0.5\). Therefore, using the results step function I can account for parameter risk by modeling with \(\hat p_s=E(R(\theta_s))=\mathsf{Pr}(\hat\theta_s+\hat\sigma_s Z_s>0.5) = \Phi\left((\hat\theta_s-0.5)/\hat\sigma_s\right)\). The probability of dinner on time just sharply increased: the new \(\hat p_s\) agree almost exactly with the original experience-based \(p_s\).

Kim can go back to the CEO and report that the original model included parameter risk all along! And the results are the same. Just the interpretation needs to change.

In the original interpretation the model flipped a coin with a probability \(p_s\) of heads for state \(s\) and then called a claim on heads. The risk was all in the coin flip: it was all process risk.

In the new model the coin for each state is either heads on both sides (a claim) or tails on both sides (no claim). There is no coin-flip risk. Based on the estimate \(\hat\theta_s\), Kim has a prediction about each coin: \(\hat\theta_s>0.5\) corresponds to a claim, and \(\hat\theta_s<0.5\) corresponds to no claim. When \(\hat\theta_s>0.5\), Kim has confidence \(p_s=\Phi((\hat\theta_s-0.5)/\hat\sigma_s)>0.5\) that the true \(\theta_s>0.5\) and there will be a claim. And when \(\hat\theta_s<0.5\) Kim has confidence \(1-p_s>0.5\) that the true \(\theta_s<0.5\) and there will not be a claim. If \(\sigma_s=0\), then the predictions would all be perfect and all the risk disappears. For very large \(\sigma_s\), the predictions are useless and the model has the same risk as the old coin toss model,, but the new model has converted process risk into parameter risk.

If we could replicate the experiment many times then, obviously, the claims experience would be the same each time—there is no uncertainty in the coin toss when the coin has the same face on both sides! But the predictions would vary with each experiment and each state would be called correctly a proportion \(\hat p_s\) of the time. Where the old model would say “There is an \(x\) percent chance the total loss will be greater than \(l\)” the new model says “I am \(x\) percent confident the total loss will be greater than \(l\).” Kim feels ready to review with the CEO.

The CEO looks over Kim’s new workpapers. “These look very similar to your original analysis.”

“That’s true” Kim replies. “Except now I see all of the risk in the cover is parameter risk and none of it is process risk. PLA indicates a far higher risk load.” Kim explains to the CEO how the meaning of the parameters has changed.

“Excellent work!” The CEO ponders a moment longer. “There’s still one thing bothering me. I understand you are modeling \(\hat p_s\) as an expected value to allow for uncertainty in the estimate of \(\theta_s\), but you have treated each state independently. We need the full distribution of aggregate losses, which will depend on the multivariate distribution of all the estimates \(\hat \theta_s\). How are you accounting for possible dependencies between the \(\hat\theta_s\)?”

A crestfallen Kim contemplates canceling dinner. How could I have forgotten correlation?

Kim knows statistics could help give a multivariate error distribution, but Kim modeled each state differently. The \(\hat\theta_s\) were not produced from one big multivariate model. Different combinations of variables were used to model each state; some of the variables are common across all states, but many are not. Theoretic statistics will not provide an answer.

Kim realizes a mixing distribution is needed. The presence of some common variables in each state model indicates there may be underlying factors driving correlation between the estimates \(\hat\theta_s\). Kim decides to model uncertainty as though it were perfectly correlated between the states. That means modeling losses with \(\hat\theta'_s = \hat\theta_s + T\) where \(T\) is a normally distributed shared error term.

In a few more lines of Python code, Kim extends the original \(agg\) program to allow for perfectly correlated errors, producing the revised columns in Table 2 and the revised green density in Figure 1. The probability of a loss greater than 50% of the aggregate limit has dropped from 77.1 percent to 65.1 percent. “Wow! Quite a difference,” Kim notes. The new aggregate density has a higher standard deviation. The aggregate stop loss looks more promising.

Kim realizes there is a real chance of executing a profitable deal, and goes off for a last meeting with the CEO that day in a more upbeat mood. It was worthwhile spending the time to understand the modeling of the Lectral College account. After all, bonuses depend on executing profitable deals.

Adding the E and the O

Kim has, of course, been modeling the Electoral College. Variations on Kim’s original model, which produced a 77.1 percent chance of a Clinton victory, were common prior to November 8. Poll-related headlines were overwhelmingly about the high probability of a Clinton victory. A New York Times1 article from November 10 said

Virtually all the major vote forecasters, including Nate Silver’s FiveThirtyEight site, The New York Times Upshot and the Princeton Election Consortium, put Mrs. Clinton’s chances of winning in the 70 to 99 percent range.

Table 1 shows the state-by-state probabilities of a Clinton victory (“Chance of loss” columns) on Sunday morning, November 6, as reported by FiveThirtyEight2. These probabilities correspond to the \(p_s\) in Kim’s model. The loss column corresponds to the number of Electoral College votes. FiveThirtyEight quoted a 64.2 percent chance of Clinton winning—very close to the 65.1 percent estimate from Kim’s revised model.

What is missing from Table 1 are the actual proportions of voters intending to vote for Clinton, the values \(\hat\theta_s\) from Kim’s model. The relation between \(p\) and \(\theta\) turns out to be the model’s weak link—it is very sensitive around the critical 50/50 mark. Actual election modelers had enough information to estimate the relationship and should have been atune to the sensitivity. Kim’s postmortem \(\theta\) is obviously the actual proportion of Clinton voters in each state, which, with heroic effort, could have been known (just) prior to the Election3.

There are at least two arguments for using a mixing distribution as Kim did. First, there was the possible reticence of Trump supporters to publicly affirm their candidate; these supporters may have been systematically hard for pollsters to find. And second, there was a miss overall in the polling. The Economist, in the article “Epic Fail”, wrote

AS POLLING errors go, this year’s misfire was not particularly large—at least in the national surveys. Mrs Clinton is expected to [be] … two points short of her projection. That represents a better prediction than in 2012, when Barack Obama beat his polls by three.4

These comments are consistent with Kim’s revised model. The actual outcome, with 232 votes for Clinton is the 14th percentile of the outcome distribution (Table 2). It was the 1.3 percentile for the base model.

There are a number of important lessons for actuaries in how the Election was modeled and how the results were communicated. Here we will focus on the communications issues.

Communicating Risk

In our post-truth5 world we must remember that words have consequences; they influence behavior and outcomes.

Unfortunately, the goal of simple and transparent communication rarely aligns with a compelling headline. And “Election Too Close to Call: Get Out and Vote!” is not a compelling headline. On November 6, polls showed Clinton with a total of 273 Electoral College votes in states where she led (Table 1), almost the thinnest possible margin. After “sophisticated modeling,” her thin lead turns into a far more newsworthy 77 percent probability of winning. I think most readers would be surprised Clinton’s 80-90 percent probability of victory was balanced on a point-estimate of just 273 votes.

Headlines such as “273 votes…” and “80 percent…” are consistent with the facts, yet they paint different pictures in reader’s minds and could drive different actions by registered voters. They are headlines with consequences in the real world. The analysts who created them have an obligation to ensure they are fair and accurate—though, unlike actuaries, they have no professional standards to ensure they do.

The more newsworthy “80 percent” headline paints a deceptive picture. Its precision is designed to impress yet destined to mislead. The fragility of the underlying model is exactly the same as the fragility plaguing the models of mortgage default used to evaluate CDOs and CDSs: unrecognized correlations. Have we learned nothing from the financial crisis?

Actuaries write headlines about risk. We have a responsibility to ensure our headlines communicate risk completely, that our models reflect what we know and what we do not know, and that the sensitivities of our conclusions are clear. These are important considerations: our results will be relied upon by users and will influence behavior—the ASOP requirement for an actuarial report. We must avoid misleading those who rely on our work. The first required disclosure in ASOP 41, Actuarial Communications6, concerns uncertainty or risk:

The actuary should consider what cautions regarding possible uncertainty or risk in any results should be included in the actuarial report.

The standard also requires a clear presentation

The actuary should take appropriate steps to ensure that each actuarial communication is clear and uses language appropriate to the particular circumstances, taking into account the intended users.

Many, perhaps most, headline reports were not consistent with these requirements. We will never know if the misleading presentation of the Election had an impact on the result, though it is possible.

Back Story

When this article was written, I was teaching a risk management course at St. John’s University in New York called Applications of Computers to Insurance. On the Monday before Election Day I used VBA to program a simple Monte Carlo model to produce a histogram of potential election outcomes, similar to those being reported in the press and the same as Kim’s first model. The class then estimated the probability of Clinton winning and left over-confident in a Clinton victory. The article you have just read is the result of my attempts to understand what was actually going on. I think the full story turns out to have important lessons for actuaries as we pivot to a predictive modeling perspective on risk.

Footnotes

0538 forcast](http://projects.fivethirtyeight.com/2016-election-forecast/) downloaded Sunday November 6, 2016.↩︎

Modeling a social phenomenon is always difficult because the system reacts to how we understand it. Press reports claiming “Clinton victory certain” paradoxically increase doubt about her victory by changing the behavior of voters. We have seen a similar phenomenon in the housing markets and dotcom stocks: once people believe the prices can only go up they buy at any price and create an environment where a crash is inevitable. Trying to model these intricacies is beyond the scope of the paper. In spirit, in a simplified world, where voters know their own minds in advance of visiting the polling stations, \(\theta\) could theoretically be determined somewhat in advance of the actual election. We are also ignoring third party candidates.↩︎

How a mid-sized error led to a rash of bad forecasts, The Economist, November 12, 2016.↩︎

Post-truth adj. Relating to or denoting circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief: “In this era of post-truth politics, it’s easy to cherry-pick data and come to whatever conclusion you desire,” or “Some commentators have observed that we are living in a post-truth age”. Post-truth was named 2016 word of the year by Oxford Dictionaries.↩︎

http://www.actuarialstandardsboard.org/asops/actuarial-communications/↩︎