Python Logging

Python logging: the penny finally dropped

As you may know, I’m a huge fan of Python. I’ve been programming in it for over 10 years, and I continue to find it both joyful and immensely productive. The recent addition of ChatGPT as a coding coach and advisor has only supercharged that productivity.

However, over that 10-year period, one topic consistently frustrated me: logging.

At first glance, Python’s logging module feels like a Byzantine labyrinth of needless complexity. I must have read the documentation half a dozen times, tried to implement it as described, and only ended up more frustrated each time. Perhaps you’ve had the same experience.

A couple of months ago, after yet another conversation with ChatGPT, it said something that finally made the penny drop. Suddenly, I got it. I understood why the setup appears so complicated and how to use it effectively.

The insight: logging is a conversation

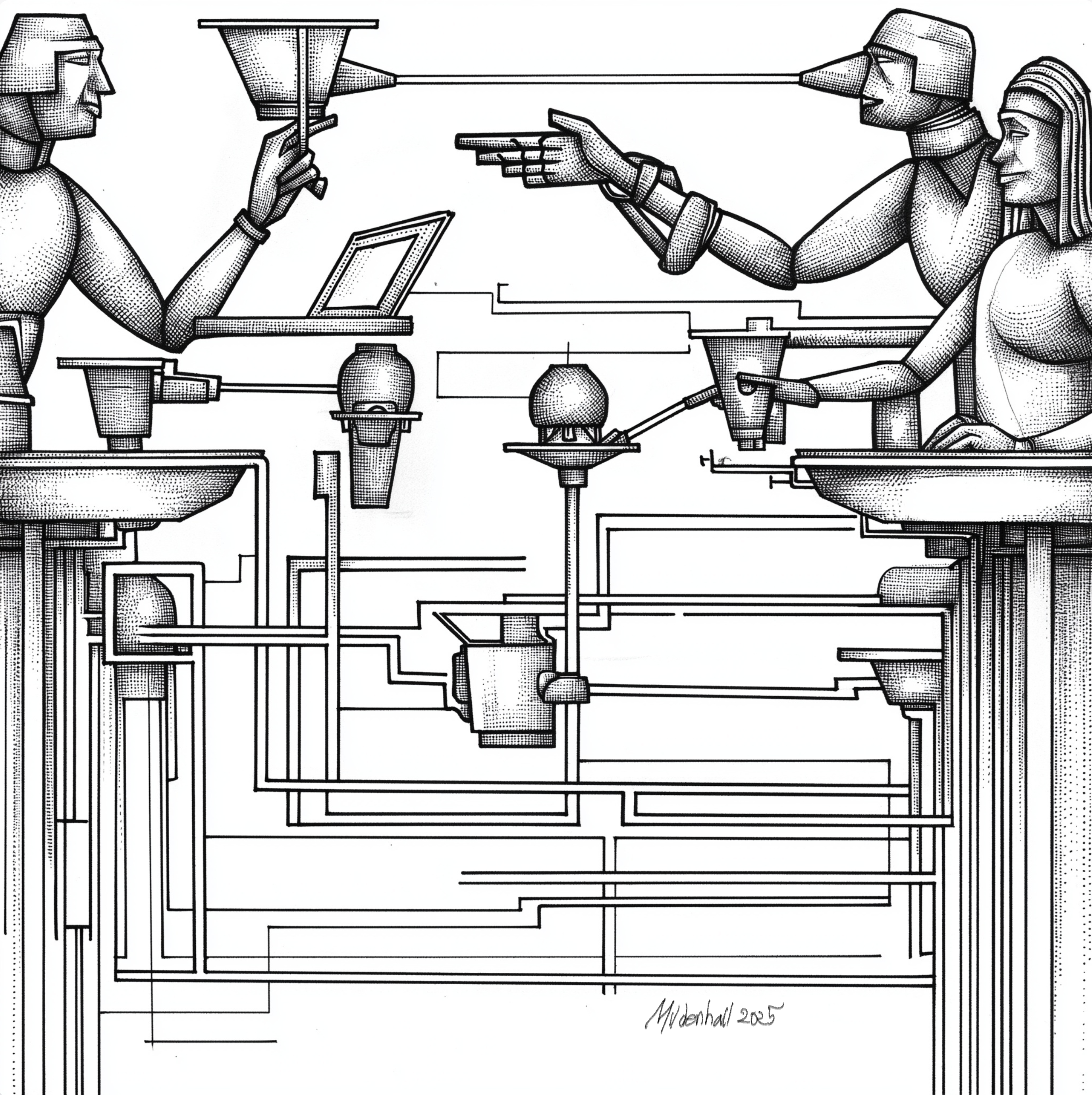

Logging is a way for your program to explain to the outside world what it’s doing. It communicates messages the user doesn’t necessarily need to see, but which the developer—and sometimes the user—will find helpful. Logging is part of a conversation between your program and its users.

And, like any conversation, there are two roles: the speaker and the listener.

In software, this speaker–listener pair is often abstracted as a client–server relationship (not a perfect analogy, but useful). Think of the “server” as a module or service and the “client” as the application. In this role, the service (speaker) sends messages about its status, processes, and errors to the application (listener).

Before I understood this split, my applications and services were doing both jobs at once—talking and also dictating how they should be listened to. That’s a bad model in software design and in life! Once I saw that the speaker’s job is simply to talk and the listener’s job is to decide how to handle that information, everything became much simpler.

This was the key insight for me: if you’re building a package or service, your only job is to talk. Your logging setup is very simple—you just emit messages. In Python, this is extremely easy to do:

    import logging

    logger = logging.getLogger(__name__)


    def do_something():
        logger.info("Starting something...")
        # your logic here
        logger.debug("Something finished successfully.")

This “talking” part of logging is only a tiny fraction of the Python logging documentation. Most of the documentation focuses on the listening side: which messages to hear, how to filter them, where to display or store them.

If you’re building an application, you’re the listener. It’s your job to decide how to respond to the logging chatter coming from the packages and services you use.

This separation of responsibilities simplifies everything: packages just “talk,” and applications define what and how they “listen”.
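To make the split concrete, here is a minimal sketch with both roles in one file. The package name `mypackage.core` is hypothetical, and an in-memory stream stands in for the console so the result can be inspected. The speaker only emits messages; the listener alone decides the level, the format, and the destination.

```python
import io
import logging

# --- Speaker: what a package module would contain (hypothetical name) ---
pkg_logger = logging.getLogger("mypackage.core")


def do_something():
    pkg_logger.info("Starting something...")
    pkg_logger.debug("Something finished successfully.")


# --- Listener: what the application decides ---
stream = io.StringIO()  # stand-in for a console or a file
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s|%(name)s|%(message)s"))

app_view = logging.getLogger("mypackage")  # parent of mypackage.core
app_view.setLevel(logging.INFO)            # listen to INFO and above
app_view.addHandler(handler)
app_view.propagate = False                 # keep the demo away from the root logger

do_something()
captured = stream.getvalue()
print(captured, end="")
```

Only the INFO message is captured. The DEBUG message is still "spoken" by the package, but the listener chose not to hear it, and no package code had to change to make that choice.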

How I simplified my logging setup

I mostly build packages and services, and their logging is trivial: they just emit messages. The applications, which I build far less frequently, are where the listening is defined.

These days, I mostly work interactively in JupyterLab. I’ve now set up a simple YAML-based logging configuration that lets me control everything from one file:

- Which modules’ messages to listen to

- What levels to log

- Where to send the logs (console, files, web apps, etc.)

Here’s an example of a clean YAML spec with multiple handlers and formatters. Formatters transform the logging messages and handlers “listen” to them, e.g., on the console or in a file. The magic is the loggers section, which matches up the package chatter (aggregate and greater_tables are two of my packages) with the handlers. Setting propagate: false on each named logger is essential: without it, every record would also travel up to the root logger and be printed a second time by the root’s console handler. It’s not the simplest example, but I find it useful in practice. See the appendix for a full explanation of what it is doing.

    version: 1
    disable_existing_loggers: false

    formatters:
      json:
        # "()" rather than "class": dictConfig only passes extra keys such as
        # rename_fields to the constructor when the formatter is declared this way
        (): pythonjsonlogger.jsonlogger.JsonFormatter
        format: "%(asctime)s %(levelname)s %(name)s %(message)s"
        rename_fields:
          levelname: level
          asctime: time
      json_detailed:
        (): pythonjsonlogger.jsonlogger.JsonFormatter
        format: "%(asctime)s %(levelname)s %(name)s %(message)s %(filename)s \
          %(funcName)s %(lineno)d %(process)d %(threadName)s"
        rename_fields:
          asctime: time
          levelname: level
      plain:
        class: logging.Formatter
        format: "%(asctime)s|%(levelname)-6s|%(name)s[%(filename)s:%(lineno)d %(funcName)s] %(message)s"
        datefmt: "%H:%M:%S"

    handlers:
      console:
        class: logging.StreamHandler
        level: WARNING
        formatter: plain
        stream: ext://sys.stderr
      working_console:
        class: logging.StreamHandler
        level: INFO
        formatter: plain
        stream: ext://sys.stderr
      file_agg:
        # rotate based on size
        class: logging.handlers.RotatingFileHandler
        maxBytes: 10485760
        backupCount: 3
        filename: /tmp/aggregate.json
        level: INFO
        formatter: json
        encoding: utf8
        delay: true
      file_greater_tables:
        # rotate daily
        class: logging.handlers.TimedRotatingFileHandler
        when: midnight
        interval: 1
        backupCount: 7
        filename: /tmp/greater_tables.json
        level: DEBUG
        formatter: json_detailed
        encoding: utf8
        delay: true
      file_myfin:
        # rotate daily
        class: logging.handlers.TimedRotatingFileHandler
        when: midnight
        interval: 1
        backupCount: 7
        filename: /tmp/my_finance.json
        level: DEBUG
        formatter: json_detailed
        encoding: utf8
        delay: true

    loggers:
      aggregate:
        level: INFO
        handlers: [console, file_agg]
        propagate: false
      greater_tables:
        level: DEBUG
        handlers: [console, file_greater_tables]
        propagate: false
      myfinance:
        level: DEBUG
        handlers: [working_console, file_myfin]
        propagate: false

    # root is a top-level key, not an entry under loggers
    root:
      level: WARNING
      handlers: [console]

With this single YAML file, I can switch focus between modules, adjust levels, and change outputs without touching any package code. I can even reuse the same setup in other applications, such as web services.
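A YAML file like this does nothing until the application loads it. Here is a minimal sketch of that step, assuming the third-party PyYAML package is installed. The helper name `configure_logging_from_yaml` and the logger name `demo_pkg` are hypothetical. The standard-library call that does the work is `logging.config.dictConfig`, which takes a plain dictionary, so the second half of the sketch uses an inline dict and runs without PyYAML.

```python
import logging
import logging.config


def configure_logging_from_yaml(path):
    """Load a dictConfig-style YAML file and apply it (requires PyYAML)."""
    import yaml  # third-party: pip install pyyaml

    with open(path, encoding="utf-8") as fh:
        config = yaml.safe_load(fh)
    logging.config.dictConfig(config)


# The same mechanism with an inline dict, so the sketch runs without PyYAML.
MINIMAL_CONFIG = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {"plain": {"format": "%(levelname)s|%(name)s|%(message)s"}},
    "handlers": {
        "console": {
            "class": "logging.StreamHandler",
            "level": "INFO",
            "formatter": "plain",
            "stream": "ext://sys.stderr",
        }
    },
    "loggers": {
        "demo_pkg": {"level": "INFO", "handlers": ["console"], "propagate": False}
    },
    "root": {"level": "WARNING", "handlers": ["console"]},
}

logging.config.dictConfig(MINIMAL_CONFIG)
demo = logging.getLogger("demo_pkg")
demo.info("configured from a dict")
```

In a notebook, one call to a helper like this near the top is typically enough. Calling it again after editing the file re-applies the configuration.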

My takeaway

My packages and services had been “talking” (correct) but also trying to control how they were heard (incorrect). I hadn’t realized the split of roles between talking and listening. Perhaps there’s a life metaphor in there somewhere!

As soon as the distinction between talking and listening—between client and server, between package and application—was made clear, all of Python’s logging system finally fell into place.

It was a great reminder that sometimes a single missing piece of knowledge can render a topic completely incomprehensible. But once that missing insight is added, everything can suddenly become crystal clear.

Appendix: Explanation of YAML File

GPT Generated

This logging configuration showcases the flexibility and power of Python’s logging system. It defines a structured, maintainable, and production-ready logging setup using a YAML config file. Here’s what it does.

Defines three formatters

- `json`: compact JSON logs suitable for ingestion by logging systems.

- `json_detailed`: richer JSON format including filename, function name, line number, process ID, and thread name.

- `plain`: human-readable format for terminal output, including timestamps and code locations.
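To show what a JSON formatter does to a log record, here is a small standard-library stand-in. It is not python-json-logger and makes no claim about how that library is implemented. The class name `MiniJsonFormatter` is hypothetical, and the field names `time` and `level` simply mirror the renames in the YAML above.

```python
import json
import logging


class MiniJsonFormatter(logging.Formatter):
    """Hypothetical stand-in: renders each record as one JSON object."""

    def format(self, record):
        payload = {
            "time": self.formatTime(record, self.datefmt),
            "level": record.levelname,
            "name": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


# Build a record by hand so the sketch needs no handlers or global config.
record = logging.LogRecord(
    "aggregate", logging.INFO, "example.py", 10, "loaded %d rows", (3,), None
)
line = MiniJsonFormatter().format(record)
parsed = json.loads(line)
print(line)
```

Each record becomes a single line that a log ingestion tool can parse without regular expressions, which is the point of routing the file handlers through a JSON formatter.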

Defines five handlers, each controlling how and where logs are emitted.

- `console` and `working_console` stream logs to stderr using the plain formatter, at `WARNING` and `INFO` level respectively.

- `file_agg` writes compact JSON logs to a rotating file (based on size).

- `file_greater_tables` and `file_myfin` rotate daily and retain 7 days of detailed logs.
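Size-based rotation is easy to see with a deliberately tiny limit. This sketch uses a temporary directory and a hypothetical logger name, `rotation_demo`, and sets `maxBytes` to 200 rather than the 10 MB used in the config so that rollover happens after a handful of messages.

```python
import logging
import logging.handlers
import os
import tempfile

log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "demo.log")

handler = logging.handlers.RotatingFileHandler(
    log_path, maxBytes=200, backupCount=3, encoding="utf8", delay=True
)
# With delay=True the file is not opened until the first record is written.
file_created_early = os.path.exists(log_path)

rot_logger = logging.getLogger("rotation_demo")
rot_logger.setLevel(logging.INFO)
rot_logger.addHandler(handler)
rot_logger.propagate = False

for i in range(50):
    rot_logger.info("message number %d with some padding", i)
handler.close()

# The live file plus at most backupCount numbered backups remain.
files = sorted(os.listdir(log_dir))
print(files)
```

Older backups are discarded once `backupCount` is reached, which is what keeps disk usage bounded.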

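Defines three named loggers, customized for each package I’m currently working on or with.

- `aggregate` passes `INFO` and above to the console and `file_agg`. Because the `console` handler has its own `WARNING` threshold, `INFO` messages reach only the file.

- `greater_tables` passes `DEBUG` and above to the console and `file_greater_tables`, with the same `WARNING` threshold on the console.

- `myfinance` uses a dedicated console and file handler, again at `DEBUG` level.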
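A record is filtered twice: once by the logger's level and again by each handler's level. The sketch below mimics that with in-memory streams standing in for the console and the file. The logger name `levels_demo` is hypothetical.

```python
import io
import logging

console_stream = io.StringIO()  # stand-in for stderr
file_stream = io.StringIO()     # stand-in for the log file

console = logging.StreamHandler(console_stream)
console.setLevel(logging.WARNING)   # handler-level threshold
file_like = logging.StreamHandler(file_stream)
file_like.setLevel(logging.INFO)

lvl_logger = logging.getLogger("levels_demo")
lvl_logger.setLevel(logging.INFO)   # logger-level threshold
lvl_logger.propagate = False
lvl_logger.addHandler(console)
lvl_logger.addHandler(file_like)

lvl_logger.debug("dropped by the logger")
lvl_logger.info("file only")
lvl_logger.warning("both")

console_lines = console_stream.getvalue().splitlines()
file_lines = file_stream.getvalue().splitlines()
print(console_lines, file_lines)
```

This is why one logger can feed a quiet console and a verbose file at the same time.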

The root logger handles all uncaptured logs at `WARNING` level or above.

The configuration illustrates the use of:

- Rotation: to avoid unbounded log file growth.

- Encoding: UTF-8 for safe text handling.

- `delay: true`: defers opening each log file until the first record is written to it.

- `propagate: false`: prevents duplicate logging by stopping records from also being passed up to the root logger's handlers.
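The duplication that propagation causes can be reproduced in a few lines. In this sketch a parent logger named `prop_demo` (hypothetical) plays the role of the root logger, and the same handler is attached to both parent and child, just as `console` is attached to both the root and the named loggers in the YAML.

```python
import io
import logging

stream = io.StringIO()
shared = logging.StreamHandler(stream)

parent = logging.getLogger("prop_demo")  # plays the role of root in this demo
parent.setLevel(logging.WARNING)
parent.addHandler(shared)
parent.propagate = False                 # isolate the demo from the real root

child = logging.getLogger("prop_demo.pkg")
child.setLevel(logging.WARNING)
child.addHandler(shared)

# Propagation on: the child's handler fires, then the parent's handler fires.
child.propagate = True
child.warning("hello")
with_propagation = stream.getvalue().count("hello")

# Propagation off: only the child's own handler fires.
stream.seek(0)
stream.truncate(0)
child.propagate = False
child.warning("hello")
without_propagation = stream.getvalue().count("hello")

print(with_propagation, without_propagation)
```

An alternative design is to attach handlers only to the root logger and leave propagation on. The YAML above instead gives each package its own handlers, which makes `propagate: false` necessary.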

This configuration supports both human-friendly debugging during development and machine-readable logging in production—without changing any logging calls in your Python code.