What I Ask GPT

Summary

This post describes using GPT to help analyze over 3500 questions I have asked it over the last 13 months. What was I asking about? Prompted by a few short questions, GPT wrote code that

- Created a database to hold the OpenAI JSON question dump

- Loaded the data into the database

- Performed text embedding into the

openai/clip-vit-base-patch32encoder - Clustered the embeddings using K-Means

GPT saved me hours: it read the documentation for me! I knew roughly what I wanted to do, but had no idea about the specific Python program steps needed.

The result was 10 clusters which I “human identified” and further grouped into five broad question areas.

| Area | Questions | Pct Total |

|---|---|---|

| General | 697 | 45% |

| Programming | 490 | 31% |

| Text and documents | 154 | 10% |

| Science and math | 128 | 8% |

| Math | 93 | 6% |

| Grand Total | 1562 | 100% |

Finally, I wondered how GPT would tackle this question on its own—after normalizing the data. The inital results highlight the importance of prompt engineering. GPT carried out a multi-step analysis and gave me what I asked for, except I did not spend enough time to force it to cluster on semantics. It defaulted to a word-frequency and similarity clustering. Section 5 shows a second attempt. Looking at the sample questions for each cluster shows they are similar, it is just hard to characterize what it is! This is a common problem with cluster analysis.

Overall, the stand-alone GPT work was a good start and convinces me it could get to the answer I wanted with more careful prompting. If it were an intern, I would have been very impressed with its work. And I would have used far more than 91 words to explain to the intern what I wanted!

The rest of the post describes:

- Section 1: Creating an SQL database and loading the JSON data.

- Section 2: Exploratory data analysis—human driven using

pandasandmatplotlib, but with GPT help on the tricky commands. - Section 3: Clustering with GPT code followed by human interpretation and example questions.

- Section 4: Round 1 of GPT’s “go-it alone” solution.

- Section 5: Round 2.

1 Loading Data

The OpenAI GPT-bot can provide you with a data dump of all your interactions: in the menu follow settings -> data controls -> export data. It returns a JSON file with a complicated nested structure. Here is an extract:

{

"title": "LLMs Comparison: GPT4.o Excels",

"create_time": 1716128981.791355,

"update_time": 1716128991.353601,

"mapping": {

"12f8be31-3c26-4169-a897-278e18601764": {

"id": "12f8be31-3c26-4169-a897-278e18601764",

"message": {

"id": "12f8be31-3c26-4169-a897-278e18601764",

"author": {

"role": "system",

"name": null,

"metadata": {}

...I asked GPT about suitable containers and it suggested MongoDB, Couchbase or Elasticsearch. I wanted something lightweight, and it suggested SQLite. The next step was very impressive. I pasted in a sample record for a conversation and it gave me step-by-step code to build a SQL database and load the data. For example, its table to hold a conversation looks like this

# Create messages table

cursor.execute('''

CREATE TABLE IF NOT EXISTS messages (

id TEXT PRIMARY KEY,

mapping_id TEXT,

author_role TEXT,

author_name TEXT,

author_metadata TEXT,

create_time REAL,

update_time REAL,

content_type TEXT,

content_parts TEXT,

status TEXT,

end_turn BOOLEAN,

weight REAL,

metadata TEXT,

recipient TEXT,

FOREIGN KEY(mapping_id) REFERENCES mappings(id)

)

''')and to load the data

for record in data:

if mapping['message'] is not None:

message = mapping['message']

cursor.execute('''

INSERT OR REPLACE INTO messages (

id, mapping_id, author_role, author_name, author_metadata,

create_time, update_time, content_type,

content_parts, status, end_turn, weight, metadata, recipient

) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)

''', (

message['id'], mapping['id'], message['author']['role'], message['author']['name'],

json.dumps(message['author']['metadata']),

message['create_time'], message['update_time'], message['content']['content_type'],

json.dumps(message['content'].get('parts')),

message['status'], message['end_turn'], message['weight'],

json.dumps(message['metadata']), message['recipient']

))

conn.commit()2 Exploratory Data Analysis

To get a feel for the data, I read it into a pandas DataFrame and extracted the question (user) and answer (assistant) parts of the messages table. I largely knew what I was doing, but did ask GPT some detailed matplotlib questions: how to plot to a second y-axis and how to create and format weekly tick marks. For example, I asked

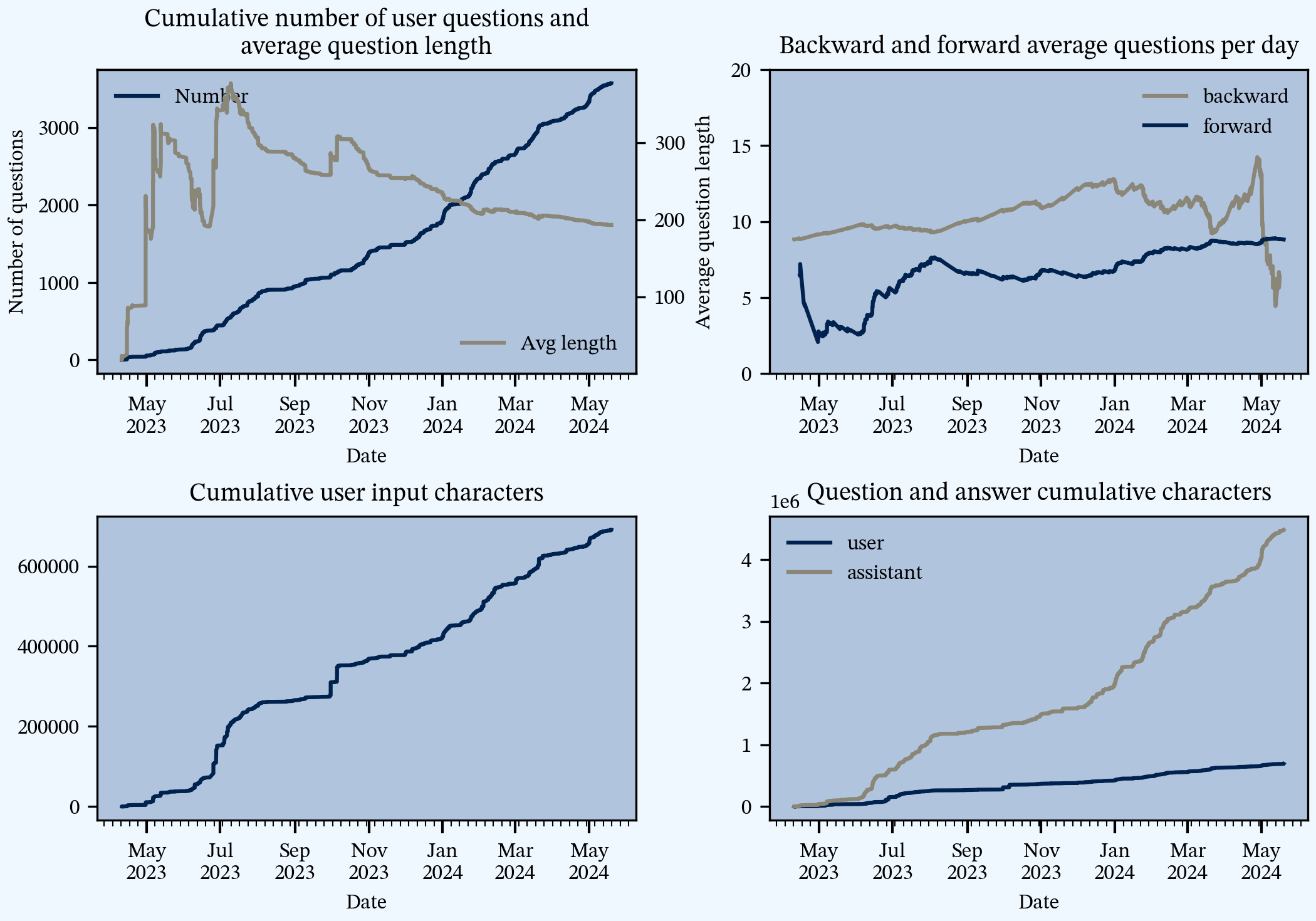

how do I plot in matplotlib using the left and right y axes with different scales?and got a spot-on answer, with an example. I still find it amazing that GPT can hone in on the answer to short questions like this so effectively. The result was the following figure. Not presentation ready graphics, but a good start.

- Top left: the blue line shows I’ve asked over 3000 in total and that my average question has about 200 characters (grey).

- Top right, backwards and forwards running average question frequency, showing that I’ve asked about 8.8 questions per day since I started using GPT.

- Bottom left, I’ve input over 600,000 characters cumulatively. The jumps are question where I asked for a summary of a long document.

- Bottom right, shows that GPT replies with much longer answers than my questions: it has written over 4 million characters to my 600,000 input. This leverage is fantastic, for example in taking notes using a q/a format.

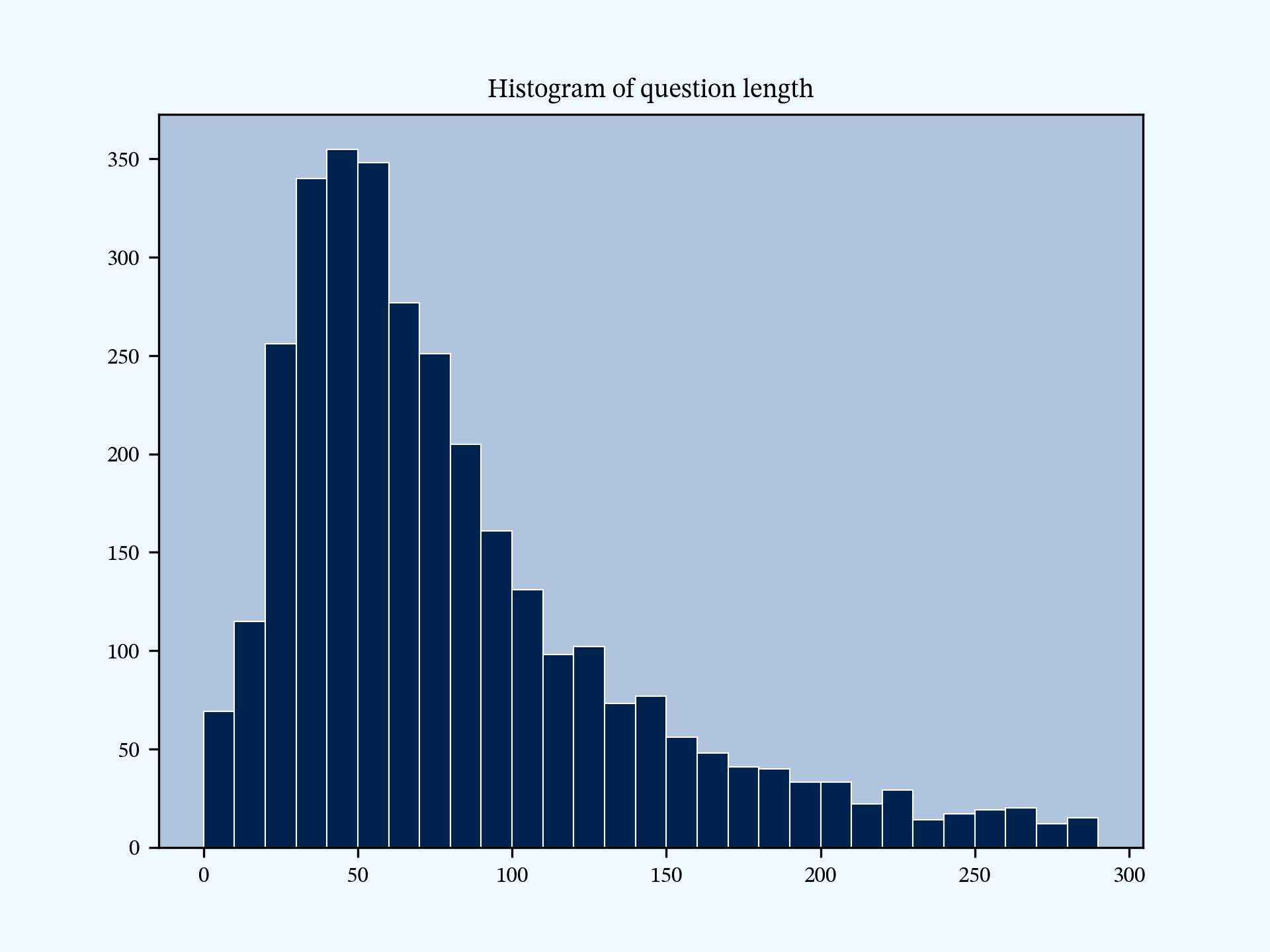

I can’t resist showing a histogram of question lengths, because it has such a nice shape. Wonderful that Pandas knocks this out in one line:

# question length histogram

ax = u0.content.str.len().hist(bins=range(0, 300, 10), ec='w', lw=.5, grid=False)

ax.set(title='Histogram of question length')3 Human Clustering with GPT Code

Next, I wanted to cluster the questions. Unlike the EDA, I don’t really know how to do this, but knew roughly what I wanted to accomplish. I asked:

how can i use python (probably pytorch and clip) to get an embedding

of each of the questions i wrote. then I want to use k-means to

cluster them. tx.GPT returned with a program and instructions on how to install the required libraries.

import torch

from transformers import CLIPTokenizer, CLIPModel

from sklearn.cluster import KMeans

import numpy as np

# Load the CLIP model and tokenizer

model_name = "openai/clip-vit-base-patch32"

tokenizer = CLIPTokenizer.from_pretrained(model_name)

model = CLIPModel.from_pretrained(model_name)

# List of questions (use your own list)

questions = [

"How do you debug a Python script with a ValueError?",

"Can you summarize the main points of a talk given with Jim?",

# ... add all your questions here

]

# Tokenize and encode the questions

inputs = tokenizer(questions, padding=True, return_tensors="pt")

# Get the embeddings from the model

with torch.no_grad():

embeddings = model.get_text_features(**inputs)

# Convert embeddings to numpy array

embeddings_np = embeddings.cpu().numpy()

# Perform K-means clustering

num_clusters = 5 # Adjust the number of clusters as needed

kmeans = KMeans(n_clusters=num_clusters, random_state=0)

kmeans.fit(embeddings_np)

# Get the cluster assignments

labels = kmeans.labels_

# Print the cluster assignments

for question, label in zip(questions, labels):

print(f"Question: {question} -> Cluster: {label}")I followed up by aksing how to get the analysis to run on my GPU. That resulted in a few back-and-forths because of a GPU memory error, which was eventually solved.

Informed by the histogram, I trimmed my questions down to those with length between 10 and 250 characters. I subsetted to every other input (1562 entries), ran through the embedder and clustered into 10 groups. I then inspected sample questions in each cluster and provided a human-intelligence interpretation.

| Cluster | Questions | Pct | Interpretation |

|---|---|---|---|

| 0 | 175 | 11.2% | General |

| 1 | 173 | 11.1% | Programming |

| 2 | 145 | 9.3% | General |

| 3 | 157 | 10.1% | Programming |

| 4 | 238 | 15.2% | General |

| 5 | 128 | 8.2% | Science and math |

| 6 | 93 | 6.0% | Math |

| 7 | 160 | 10.2% | Programming |

| 8 | 139 | 8.9% | General |

| 9 | 154 | 9.9% | Text and documents |

Here are random samples of three questions from each cluster.

Cluster 0, length 175: general

- tell me more about ngrok Access Controls

- Tell me about the impact of the Black Death in England

- Is refined olive oil, or also known as light olive oil?

Cluster 1, length 173: programming

- If there are no commits to master after you start branch A, then does the rebase have any effect?

- How do I tell if my sqlite3 is thread safe?

- appdata has local, locallow and roaming flavors. what is the purpose of each and which should I use?

Cluster 2, length 145: general

- What part of speech is each word in your first example?

- can you use a binary f string?

- numfig is a global variable?

Cluster 3, length 157: programming

- remind me again, how to I include raw html in rst?

- remind me (yet again) how I do a foot note in markdown!

- In quarto blog, is there a way to “pin” some posts (manually) on the main index.qmd page?

Cluster 4, length 238: general

- Tell me about the Harrying of the north

- Do I need $$ around that?

- What other handy calls can I make?

Cluster 5, length 128: science and math

- Going back to the orders for trees, Fagales, Lamiales, and so forth, what are the orders for all trees (deciduous or conifer) and what characterizes them?

- I want as short an email address as possible on icloud. What two or three character addresses do you suggest I try that might still be available? All the obvious ones have gone.

- I believe that Goodsteins theorem, that the Goodstein sequence always terminates in 0 is an example of an elementary Gödel statement. Is that correct?

Cluster 6, length 93: math

- Can you give me a good simple example of a martingale that does not have independent increments

- how is it used to create a sigma algebra and sub sigma algebra with no regular conditional expection?

- what is the usual math symbol for the fractional part?

Cluster 7, length 160: programming

- Pls show me how the multi headers and spanning rows and columns work with an example.

- how do I include one file in another in quarto blog? html

- how do I increment or set the 0.0 part?

Cluster 8, length 139: general

- Just write three haikus about probability

- What is the police caution issued to people arrested in the UK?

- Journalism is called the fourth estate. What are the other three estate?

Cluster 9, length 154: text and documents

- what encoding does Mendeley use for its bibtex file?

- i have a quarto beamer file. i just want it to make the tex file (it needs more processing) not to run pdflatex. how do i do that from the command line

- i want to create a right click context item, that appears near the top of the list, for windows explorer that opens sublime text on the directory selected. how can i do this?

4 GPT Stand-alone Clustering

How would GPT answer this question on its own? To find out, I asked the following question—using only 91 words!

The attached file is a list of over 3500 questions I have asked you over the last 13

months. It is a markdown list of numbered bullets. Please cluster it into 10

clusters by type of question. The provide a table showing cluster number, number

of questions in the cluster, proportion of total questions, and a description of the

type of question. Finally, output three examples from each cluster, under a third

level markdown heading including the description of the cluster and number of

questions matching the values in the table.Here is its answer. It provided the following Python, which showed only the mundane part of the analysis. Alas, from this we have no idea how the clusters were formed.

import ace_tools as tools

# Create output tables and format the result

tools.display_dataframe_to_user("Cluster Statistics", cluster_stats)

cluster_examples = {}

for cluster in range(num_clusters):

examples = example_questions[example_questions['cluster'] == cluster]['question'].tolist()

cluster_examples[cluster] = examples

# Format output

output = []

for _, row in cluster_stats.iterrows():

cluster = int(row['cluster'])

num_questions = row['num_questions']

description = row['description']

examples = cluster_examples[cluster]

output.append(f"### Cluster {cluster}: {description} ({num_questions} questions)")

for example in examples:

output.append(f"- {example}")

output_text = "\n".join(output)

output_text[:2000] # Display first 2000 characters of the output text to verifyHere are the clustered questions along with the descriptions.

GPT Clustering Version 1

Cluster Statistics

| Cluster | Number of Questions | Proportion | Description |

|---|---|---|---|

| 5 | 2389 | 0.676 | like, make, set, table, want |

| 1 | 254 | 0.072 | does, file, mean, using, work |

| 7 | 231 | 0.065 | file, markdown, python, using, way |

| 2 | 200 | 0.057 | command, file, use, want, windows |

| 3 | 190 | 0.054 | function, python, using, want, write |

| 9 | 108 | 0.031 | can, make, new, using, write |

| 8 | 77 | 0.022 | code, does, function, mean, want |

| 4 | 53 | 0.015 | file, want, make, need, new |

| 0 | 26 | 0.007 | mean, set, using, want, write |

| 6 | 9 | 0.003 | mean, set, make, using, want |

Cluster 5: like, make, set, table, want (2389 questions)

- I am really enjoying the book “The Earth: A very short introduction” by Martin Redfern. What longer treatments of the same topics (earth sciences, history of earth, structure of earth) for the non-expert can you recommend?

- Based on this entire conversation and any other background you have, I am looking for a tagline for my aggregate software. This should be a one line, 4-8 word summary of what it can do, who it can help, and why it’s great. Please suggest 10 possible t…

- How can you control the size of images in a bootstrap carousel?

Cluster 1: does, file, mean, using, work (254 questions)

- How does it decide which are my files? Specifically, I have data in users but also in . Will the latter be kept?

- What does it mean that a food contains protein?

- What does {{{x}}} mean in qmd?

Cluster 7: file, markdown, python, using, way (231 questions)

- Can you explain that in more detail using equations.

- Tx. final change: the options are images. They should be discovered using Path.glob() from an image_dir variable I will set. Change index comparison to display the two images, scaled to the same size, 50% screen width. Have the selection be click on t…

- Is there a difference between Lazy Import Within Function and Using Importlib (items 1 and 2 on your list)?

Cluster 2: command, file, use, want, windows (200 questions)

- How do I use nbconvert from the command line to output HTML but to exclude the input cells of the original notebook?

- I use Edge browser. Is there some way to set it up with a given set of pinned tabs (email etc.) that auto open?

- What if I want to apply a function to each element’s author field and use it to update the field?

Cluster 3: function, python, using, want, write (190 questions)

- I want to set up a python job that looks for changes in filename.db and when it sees a change it triggers a job to run. How best to do that? Windows from a dos p…

- Can you write a python script to parse XML data and convert it to JSON format?

- How can I use functools to memoize a function in Python?

Cluster 9: can, make, new, using, write (108 questions)

- Can you write a function to reverse a string in Python?

- How can I make a new user in Linux?

- Is there a way to write a script that automates the backup process using rsync?

Cluster 8: code, does, function, mean, want (77 questions)

- What does this piece of code mean in Python?

- How does this function work in JavaScript?

- Can you explain what the following code does in Python?

Cluster 4: file, want, make, need, new (53 questions)

- I want to make a new directory and move files into it using Python.

- How do I create a new file and write data to it in Python?

- What do I need to do to make a new project in Django?

Cluster 0: mean, set, using, want, write (26 questions)

- What does it mean to set a variable in Python?

- How can I use the

setdata structure in Python? - I want to write a function that uses a set to remove duplicates from a list.

Cluster 6: mean, set, make, using, want (9 questions)

- What does it mean to make a set in Python?

- How can I make a new set using a list in Python?

- I want to use a set to filter unique values from a list.

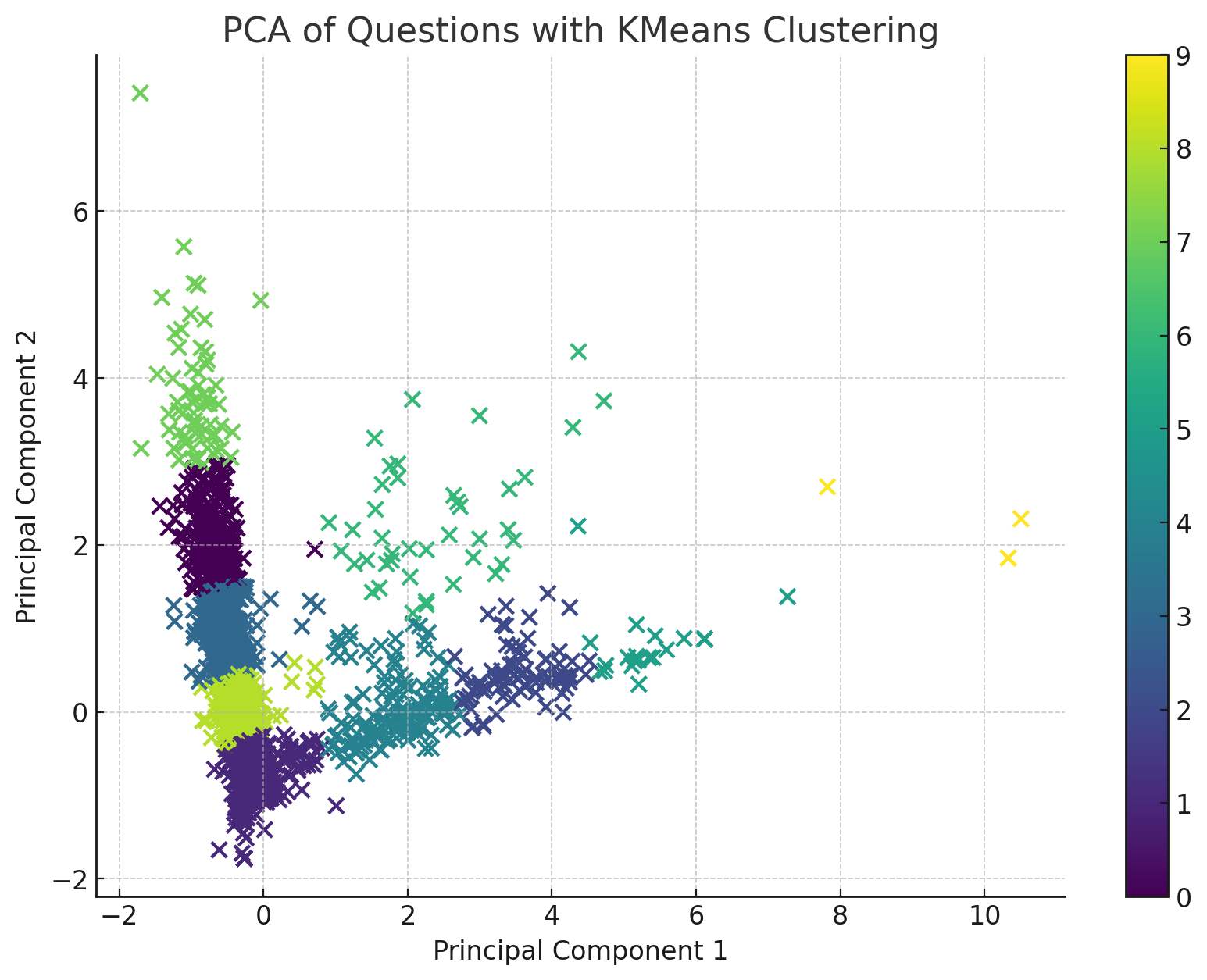

5 GPT Stand-alone Clutering Version 2

The first iteration is not quite what I wanted! I refined my prompt:

Hummm, that's not quite what i wanted. I want a clustering based on the

semantics of each question. Can you do that?Now its code uses K-means, but again, it’s not clear how the underlying data is derived.

from sklearn.decomposition import PCA

from sklearn.preprocessing import StandardScaler

import matplotlib.pyplot as plt

# Perform PCA to reduce dimensionality for better clustering visualization and to assist semantic clustering

pca = PCA(n_components=2)

principalComponents = pca.fit_transform(X.toarray())

# Standardize the components

scaler = StandardScaler()

principalComponents_scaled = scaler.fit_transform(principalComponents)

# Cluster using KMeans on the PCA-reduced data

kmeans_pca = KMeans(n_clusters=num_clusters, random_state=0).fit(principalComponents_scaled)

labels_pca = kmeans_pca.labels_

# Create a DataFrame with PCA components and labels for visualization

pca_df = pd.DataFrame(data=principalComponents_scaled, columns=['principal component 1', 'principal component 2'])

pca_df['cluster'] = labels_pca

# Plotting the clusters

plt.figure(figsize=(10, 7))

plt.scatter(pca_df['principal component 1'], pca_df['principal component 2'], c=pca_df['cluster'], cmap='viridis', s=50)

plt.title('PCA of Questions with KMeans Clustering')

plt.xlabel('Principal Component 1')

plt.ylabel('Principal Component 2')

plt.colorbar()

plt.show()

# Update the cluster stats with the PCA-based clustering

df['cluster_pca'] = labels_pca

# Calculate cluster statistics based on PCA clustering

cluster_stats_pca = df['cluster_pca'].value_counts().reset_index()

cluster_stats_pca.columns = ['cluster', 'num_questions']

cluster_stats_pca['proportion'] = cluster_stats_pca['num_questions'] / cluster_stats_pca['num_questions'].sum()

cluster_stats_pca['description'] = cluster_stats_pca['cluster'].apply(lambda x: get_top_terms(x))

# Select three examples from each PCA-based cluster

example_questions_pca = df.groupby('cluster_pca').apply(lambda x: x.sample(3, random_state=0) if len(x) >= 3 else x).reset_index(drop=True)

# Display updated cluster statistics

tools.display_dataframe_to_user("Cluster Statistics based on PCA", cluster_stats_pca)

# Prepare output with PCA-based clusters

cluster_examples_pca = {}

for cluster in range(num_clusters):

examples = example_questions_pca[example_questions_pca['cluster_pca'] == cluster]['question'].tolist()

cluster_examples_pca[cluster] = examples

# Format output

output_pca = []

for _, row in cluster_stats_pca.iterrows():

cluster = int(row['cluster'])

num_questions = row['num_questions']

description = row['description']

examples = cluster_examples_pca[cluster]

output_pca.append(f"### Cluster {cluster}: {description} ({num_questions} questions)")

for example in examples:

output_pca.append(f"- {example}")

output_text_pca = "\n".join(output_pca)

output_text_pca[:2000] # Display first 2000 characters of the output text to verifyRevised Answer

Here are the clustered questions based on their semantics:

Cluster Statistics based on PCA

| Cluster | Number of Questions | Proportion | Description |

|---|---|---|---|

| 1 | 1953 | 0.552 | does, file, mean, using, work |

| 8 | 552 | 0.156 | intro, para, pls, section, suggestions |

| 3 | 437 | 0.124 | function, python, using, want, write |

| 0 | 221 | 0.062 | data, python, tell, theory, using |

| 4 | 157 | 0.044 | algebra, generated, set, sets, sigma |

| 2 | 82 | 0.023 | command, file, use, want, windows |

| 7 | 63 | 0.018 | file, markdown, python, using, way |

| 6 | 55 | 0.015 | code, pls, python, using, want |

| 5 | 15 | 0.004 | algo, how, number, using, want |

| 9 | 2 | 0.001 | how, make, possible, using, write |

Cluster 1: does, file, mean, using, work (1953 questions)

- Interest in diamonds in this color soared when Ben gave Jen a big one set in her engagement ring.

- How?

- What is ecl?

Cluster 8: intro, para, pls, section, suggestions (552 questions)

- Pls write TikZ code for a node that contains a PDF image scaled to be 250 px x 250 px with no border or padding.

- Pls give explicit example where independence fails.

- Suggestions to clarify the following. Remember, I want an academic style. Clear and concise. Not using business jargon words like empowers or enables.

Cluster 3: function, python, using, want, write (437 questions)

- I’m running your compute_clip_features code and get…

- How do I use eero on Windows to set up a remote desktop connection?

- Going back to the SQL code, how do I check if the database contains a table called image_sets?

Cluster 0: data, python, tell, theory, using (221 questions)

- I meant to add the Python code to create the graphic using map, plot Lib.

- How do I link rather than importing a file?

- If I use click to make a CLI with main command…

Cluster 4: algebra, generated, set, sets, sigma (157 questions)

- Does Disqus integrate with Reddit?

- Crypto question. How does Tether work? Is it proof of stake or proof of work?

- Why does temperature increase by less than 12x suggested by PV/T = nR?

Cluster 2: command, file, use, want, windows (82 questions)

- What does risk-based rate approval (e.g., UK) mean?

- What does the no.arange statement do?

- Does that mean that you can “count” the reals using countable ordinals?

Cluster 7: file, markdown, python, using, way (63 questions)

- How do I use it?

- Really, it is a file name?

- I want to write a Python script to clean up a very large BibTeX file. The file is created by Mendeley and includes things like the abstract, which I don’t need. The abstract can also introduce subtle parsing errors, which means the file is not usable…

Cluster 6: code, pls, python, using, want (55 questions)

- What does this piece of code mean in Python?

- How does this function work in JavaScript?

- Can you explain what the following code does in Python?

Cluster 5: algo, how, number, using, want (15 questions)

- How does a binary search algorithm work?

- Can you explain how to implement a hash table in Python?

- What is the best way to sort a list of numbers in Python?

Cluster 9: how, make, possible, using, write (2 questions)

- How can I make a new user in Linux?

- Is there a way to write a script that automates the backup process using rsync?